Method

Key Idea:

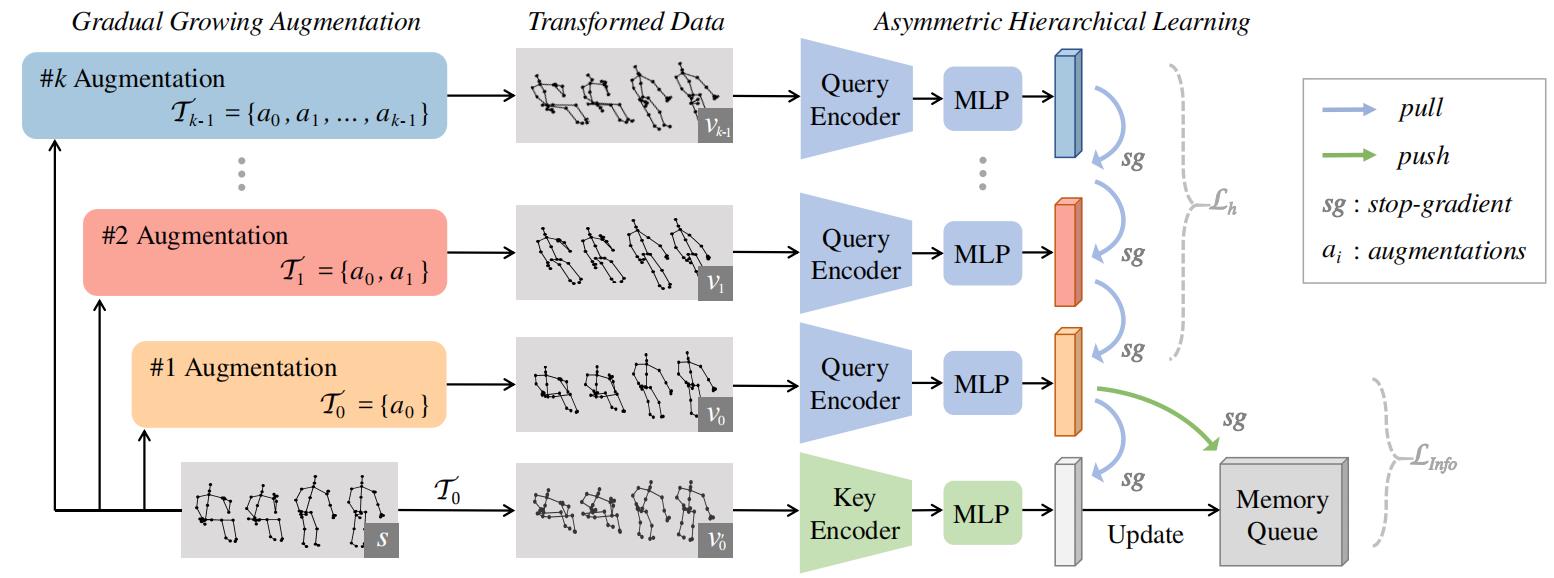

(1) Growing Augmentation. All data augmentations are divided into multiple sets. We apply these sets progressively to the skeleton data to generate augmented samples with different augmentation strengths.

(2) Hierarchical Consistent Learning. The model then learns the consistency of these positive pairs by constraining the similarity of samples generated by augmentations with adjacent strengths.
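The growing augmentation step in (1) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the three augmentations (`mirror_x`, `temporal_reverse`, `jitter`), their grouping into sets, and the function name `growing_augment` are all hypothetical choices made for the example. A skeleton sequence is assumed to be a list of frames, each frame a list of `(x, y, z)` joint coordinates.

```python
import random

# Hypothetical augmentations; the actual augmentation sets used by HiCLR
# are not specified in this section.
def mirror_x(seq, rng):
    """Flip the x coordinate of every joint."""
    return [[(-x, y, z) for (x, y, z) in frame] for frame in seq]

def temporal_reverse(seq, rng):
    """Play the sequence backwards."""
    return seq[::-1]

def jitter(seq, rng, sigma=0.01):
    """Add small Gaussian noise to every coordinate."""
    return [[(x + rng.gauss(0, sigma),
              y + rng.gauss(0, sigma),
              z + rng.gauss(0, sigma)) for (x, y, z) in frame]
            for frame in seq]

def growing_augment(seq, aug_sets, rng):
    """Apply augmentation sets cumulatively.

    views[i] is the input after sets 0..i have been applied, so each
    view is at least as strongly augmented as the one before it.
    """
    views = []
    current = seq
    for aug_set in aug_sets:
        for aug in aug_set:
            current = aug(current, rng)
        views.append(current)
    return views

# Two frames, two joints each.
sequence = [[(1.0, 2.0, 3.0), (0.5, 0.5, 0.5)],
            [(4.0, 5.0, 6.0), (0.1, 0.2, 0.3)]]
aug_sets = [[mirror_x], [temporal_reverse], [jitter]]
views = growing_augment(sequence, aug_sets, random.Random(0))
```

Because each view is built from the previous one, views with adjacent indices differ by only one augmentation set, which is what makes them natural candidates for the pairwise consistency constraint in (2).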

Figure 1. Overview of the proposed HiCLR architecture. There are k branches, each learning invariance to a different level of augmentation. We employ a growing augmentation strategy to generate multiple highly correlated positive pairs corresponding to different augmentation strengths. The augmented views are encoded via the query/key encoder and projector. Meanwhile, a hierarchical self-supervised loss is proposed to align the feature distributions of adjacent branches, and it is optimized jointly with the InfoNCE loss.
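The joint objective described in the caption can be illustrated with a small sketch. It rests on several assumptions that this page does not confirm: the "feature distribution" of a branch is taken to be a softmax over similarities to a bank of key features, adjacent branches are aligned with a KL divergence, and the names `info_nce`, `hierarchical_consistency`, the temperature `tau`, and the weight `lam` are hypothetical. The sketch only computes loss values; gradient handling (for example, which branch is treated as the target) is left out.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

def info_nce(query, pos_key, neg_keys, tau=0.07):
    """Standard InfoNCE: cross-entropy with the positive key at index 0."""
    logits = [dot(query, pos_key) / tau]
    logits += [dot(query, k) / tau for k in neg_keys]
    return -math.log(softmax(logits)[0])

def kl_divergence(p, q, eps=1e-12):
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def hierarchical_consistency(branch_feats, bank, tau=0.07):
    """Sum of KL terms between similarity distributions of adjacent branches.

    branch_feats[i] is the feature of the view with augmentation strength i.
    The KL direction (weaker branch as reference) is an assumption.
    """
    dists = [softmax([dot(f, k) / tau for k in bank]) for f in branch_feats]
    return sum(kl_divergence(weak, strong)
               for weak, strong in zip(dists, dists[1:]))

# Toy 2-D features for three branches and a small key bank.
bank = [normalize([1.0, 0.0]), normalize([0.0, 1.0]), normalize([1.0, 1.0])]
feats = [normalize([1.0, 0.1]), normalize([1.0, 0.3]), normalize([1.0, 0.6])]

lam = 1.0  # hypothetical weight between the two terms
contrastive = info_nce(feats[0], bank[0], bank[1:])
consistency = hierarchical_consistency(feats, bank)
total_loss = contrastive + lam * consistency
```

In this toy setup the consistency term is zero when all branches produce the same feature and grows as adjacent branches drift apart, which matches the stated goal of aligning neighbouring branches rather than forcing the weakest and strongest views together directly.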

Results

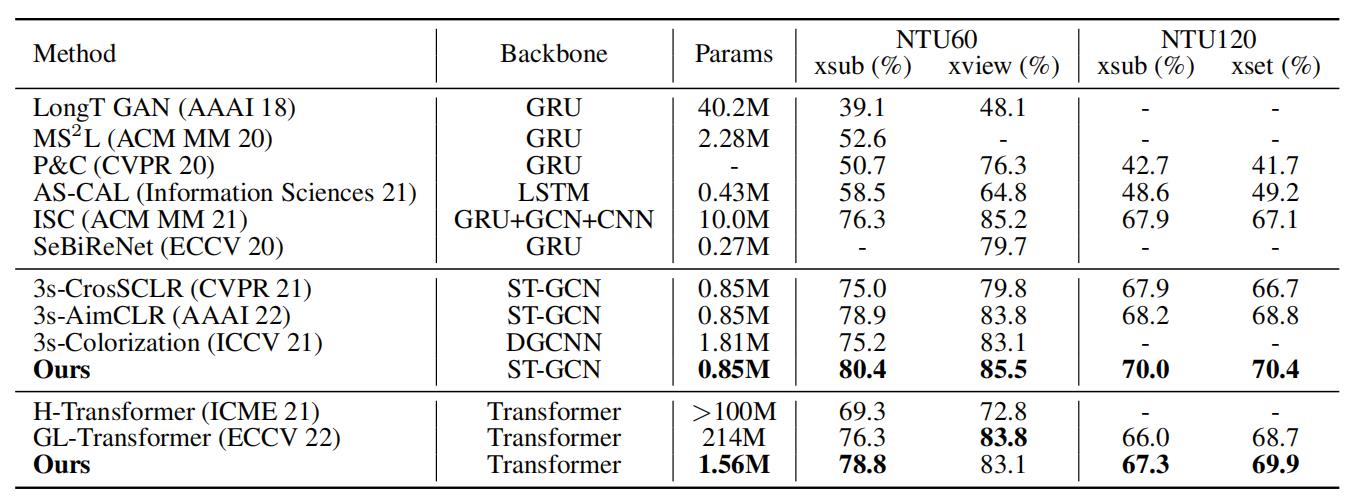

Table 1. Linear evaluation results on NTU60 and NTU120 datasets.

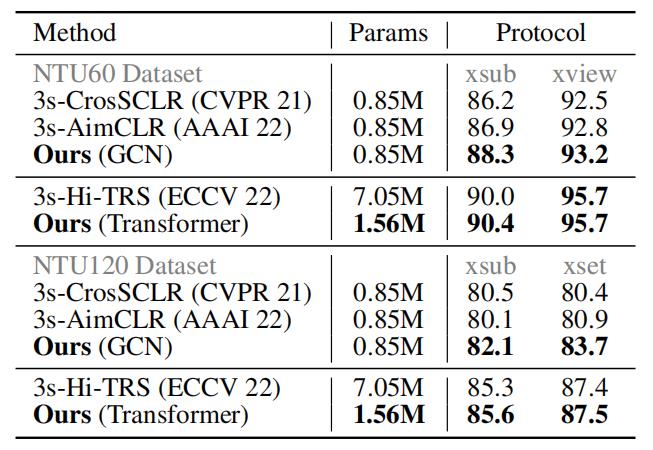

Table 2. Supervised evaluation results on NTU60 and NTU120 datasets.

Resources

Citation

@inproceedings{zhang2023hierarchical,
  title={Hierarchical Consistent Contrastive Learning for Skeleton-Based Action Recognition with Growing Augmentations},
  author={Zhang, Jiahang and Lin, Lilang and Liu, Jiaying},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  year={2023},
}

Reference

[1] Liu, J.; Song, S.; Liu, C.; Li, Y.; and Hu, Y. A benchmark dataset and comparison study for multi-modal human action analytics. TOMM 2020.

[2] Shahroudy, A.; Liu, J.; Ng, T.-T.; and Wang, G. NTU RGB+D: A large scale dataset for 3D human activity analysis. CVPR 2016.

[3] Liu, J.; Shahroudy, A.; Perez, M.; Wang, G.; Duan, L.-Y.; and Kot, A. C. NTU RGB + D 120: A large-scale benchmark for 3D human activity understanding. TPAMI 2019.

[4] Guo, T.; Liu, H.; Chen, Z.; Liu, M.; Wang, T.; and Ding, R. Contrastive Learning from Extremely Augmented Skeleton Sequences for Self-supervised Action Recognition. AAAI 2022.

[5] Lin, L.; Song, S.; Yang, W.; and Liu, J. MS2L: Multi-task self-supervised learning for skeleton based action recognition. ACM MM 2020.
