IJCAI 2024

Shap-Mix: Shapley Value Guided Mixing for Long-Tailed Skeleton Based Action Recognition

Abstract

In real-world scenarios, human actions often follow a long-tailed distribution. As a result, existing skeleton-based action recognition methods, which are mostly designed for balanced datasets, suffer a sharp performance degradation. Recently, many efforts have been devoted to long-tailed learning for images and videos. However, directly applying them to skeleton data can be sub-optimal because they do not consider the crucial spatial-temporal motion patterns; this is especially true for modality-specific techniques such as data augmentation. To this end, considering the crucial role of body parts in spatially concentrated human actions, we focus on mixing augmentations and propose a novel method, Shap-Mix, which improves long-tailed learning by mining representative motion patterns for tail categories. Specifically, we first develop an effective spatial-temporal mixing strategy for skeletons to boost representation quality. We then present a saliency guidance method, consisting of saliency estimation based on the Shapley value and a tail-aware mixing policy. It preserves the salient motion parts of minority classes in the mixed data, explicitly establishing the relationships between crucial body structure cues and high-level semantics. Extensive experiments on three large-scale skeleton datasets show remarkable performance improvements under both long-tailed and balanced settings.

Key Idea

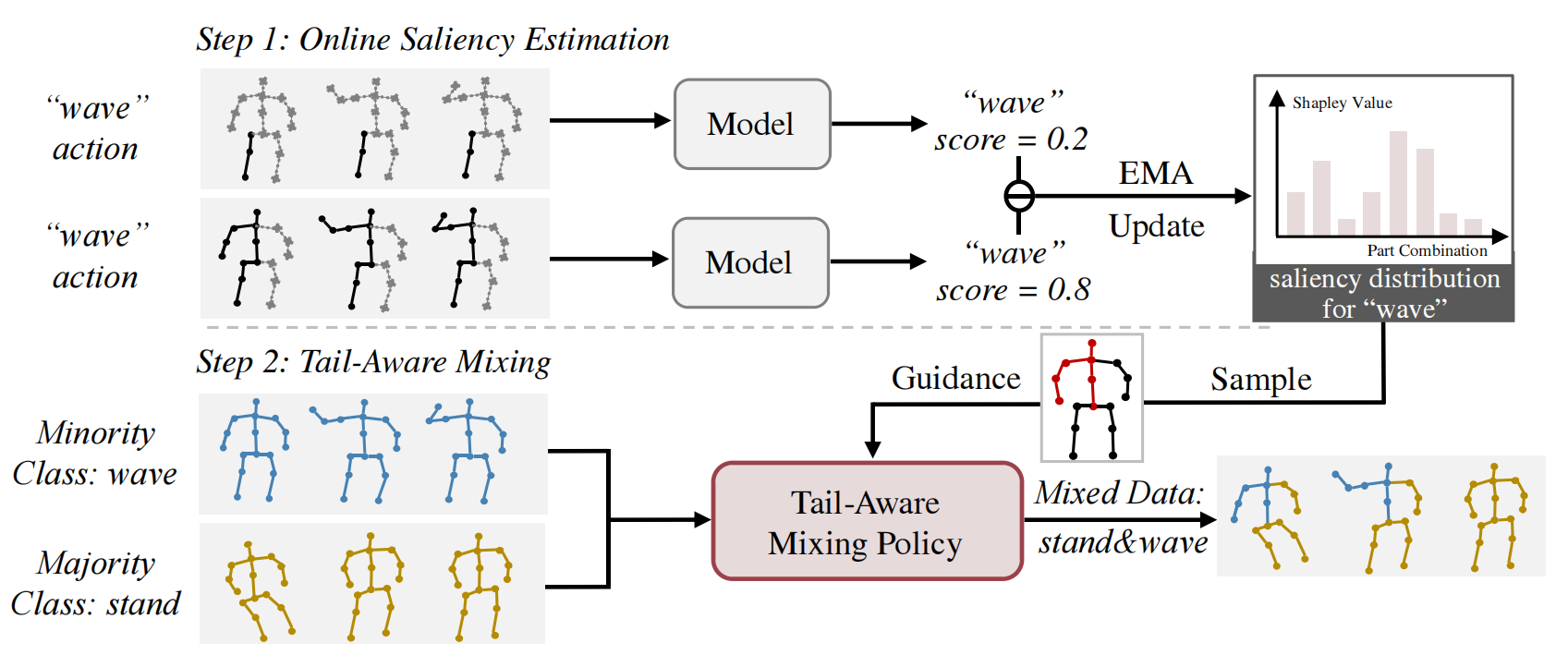

(1) Part Saliency Estimation Based on Shapley Value. We first obtain the saliency map of the skeleton joints using the Shapley value from cooperative game theory.
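As a rough illustration of step (1), the sketch below estimates per-part Shapley values by sampling random part orderings, a standard Monte Carlo approximation that is not necessarily the estimator used in the paper. The names `shapley_part_saliency`, `value_fn`, and the toy weights are hypothetical. `value_fn` stands in for a model score computed when only the listed parts keep their real motion and the remaining parts are replaced by a static baseline.

```python
import random


def shapley_part_saliency(n_parts, value_fn, n_perm=64, seed=0):
    """Monte Carlo (permutation) estimate of per-part Shapley values.

    value_fn(kept) receives a frozenset of part indices that keep their
    real motion (all other parts use the static baseline) and returns a
    scalar score, e.g. the model confidence for the true class.
    """
    rng = random.Random(seed)
    phi = [0.0] * n_parts
    order = list(range(n_parts))
    for _ in range(n_perm):
        rng.shuffle(order)
        kept = set()
        prev = value_fn(frozenset(kept))
        for p in order:
            # Marginal contribution of part p given the parts added so far.
            kept.add(p)
            cur = value_fn(frozenset(kept))
            phi[p] += cur - prev
            prev = cur
    return [v / n_perm for v in phi]


# Toy additive score (an assumption for illustration): each part
# contributes a fixed amount, so its Shapley value equals its weight.
weights = [0.5, 0.1, 0.3, 0.1]


def toy_score(kept):
    return sum(weights[p] for p in kept)


saliency = shapley_part_saliency(len(weights), toy_score)
```

With a real model, the cost is one forward pass per part per sampled ordering, which is why a small number of orderings and a coarse part partition are attractive in an online setting.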

(2) Tail-Aware Mixing Synthesis. Based on the obtained saliency map, we guide the synthesis of more representative mixed samples, which helps learn the decision boundaries of tail categories.
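A minimal sketch of the idea in step (2), under simplifying assumptions: the sample from the rarer class keeps its `k` most salient body parts, and the remaining joints are taken from the other sample. The function name, the top-`k` rule, and the joint-fraction label weight are all hypothetical choices for illustration; the temporal side of the mixing is omitted, and both sequences are assumed to have the same length.

```python
def tail_aware_part_mix(seq_a, seq_b, count_a, count_b,
                        sal_a, sal_b, part_joints, k=2):
    """Mix two skeleton sequences, preserving salient parts of the rarer class.

    seq_*: nested lists indexed as [frame][joint][channel].
    count_*: number of training samples in each sample's class.
    sal_*: per-part saliency for each sample's class.
    part_joints: list of joint-index lists, one per body part.
    Returns (mixed sequence, label weight assigned to sample a).
    """
    a_is_tail = count_a <= count_b
    if a_is_tail:
        tail, other, sal = seq_a, seq_b, sal_a
    else:
        tail, other, sal = seq_b, seq_a, sal_b

    # Pick the k most salient parts of the rarer class.
    top = sorted(range(len(part_joints)), key=lambda p: sal[p], reverse=True)[:k]
    keep = {j for p in top for j in part_joints[p]}

    n_joints = len(other[0])
    mixed = [
        [list(tail[t][j]) if j in keep else list(other[t][j])
         for j in range(n_joints)]
        for t in range(len(other))
    ]

    # Assumed label weight: fraction of joints taken from each sample.
    lam_tail = len(keep) / n_joints
    lam_a = lam_tail if a_is_tail else 1.0 - lam_tail
    return mixed, lam_a
```

The returned weight would typically be used to form a soft label, `lam_a` for the class of sample a and `1 - lam_a` for the class of sample b.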

Figure 1. A simplified illustration of Shap-Mix. We first perform online saliency estimation using the Shapley value. For dotted joints, we use the mean of the dataset as the static sequence. The calculated Shapley value is used to update the Shapley value list v_c via an exponential moving average (EMA). Finally, the mixed data is generated, preserving the representative motion patterns of the minority class (wave in this example).
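The EMA update mentioned in the caption can be sketched as follows. This is an illustrative version only: the momentum value of 0.9, the dictionary layout, and the choice to initialize a class with its first estimate are assumptions, not details taken from the paper.

```python
def ema_update(class_saliency, label, new_values, momentum=0.9):
    """Blend a fresh per-part Shapley estimate into the running list of one class.

    class_saliency: dict mapping class label -> list of per-part values.
    new_values: per-part Shapley values estimated on the current sample.
    """
    old = class_saliency.get(label)
    if old is None:
        # First estimate for this class: use it directly (assumed init).
        class_saliency[label] = list(new_values)
    else:
        class_saliency[label] = [
            momentum * o + (1.0 - momentum) * n
            for o, n in zip(old, new_values)
        ]
    return class_saliency[label]


store = {}
ema_update(store, label=3, new_values=[1.0, 0.0])
smoothed = ema_update(store, label=3, new_values=[0.0, 1.0])
```

A higher momentum makes the per-class list more stable against noisy single-sample estimates, at the cost of reacting more slowly as the model changes during training.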

Selected Experimental Results

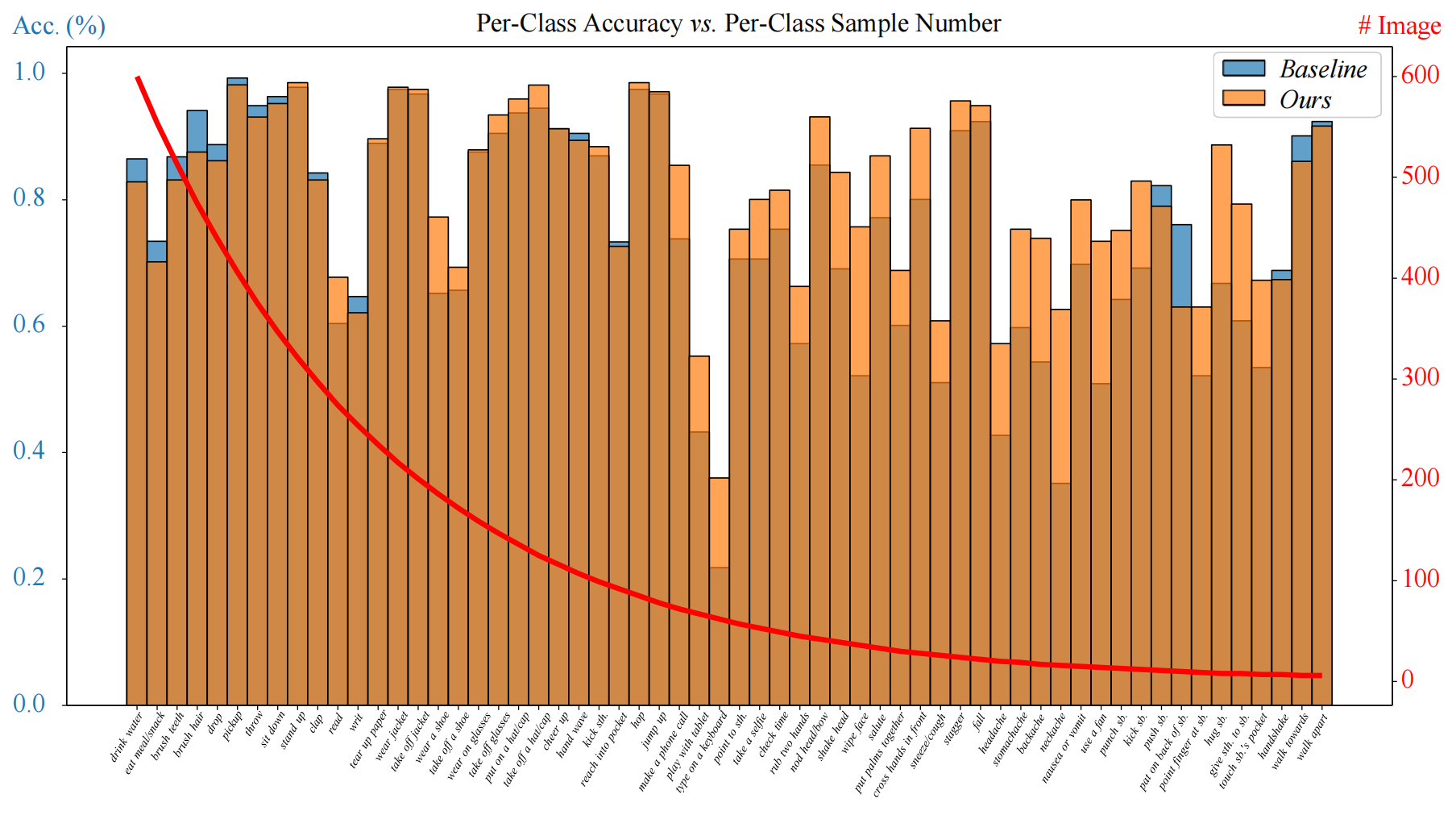

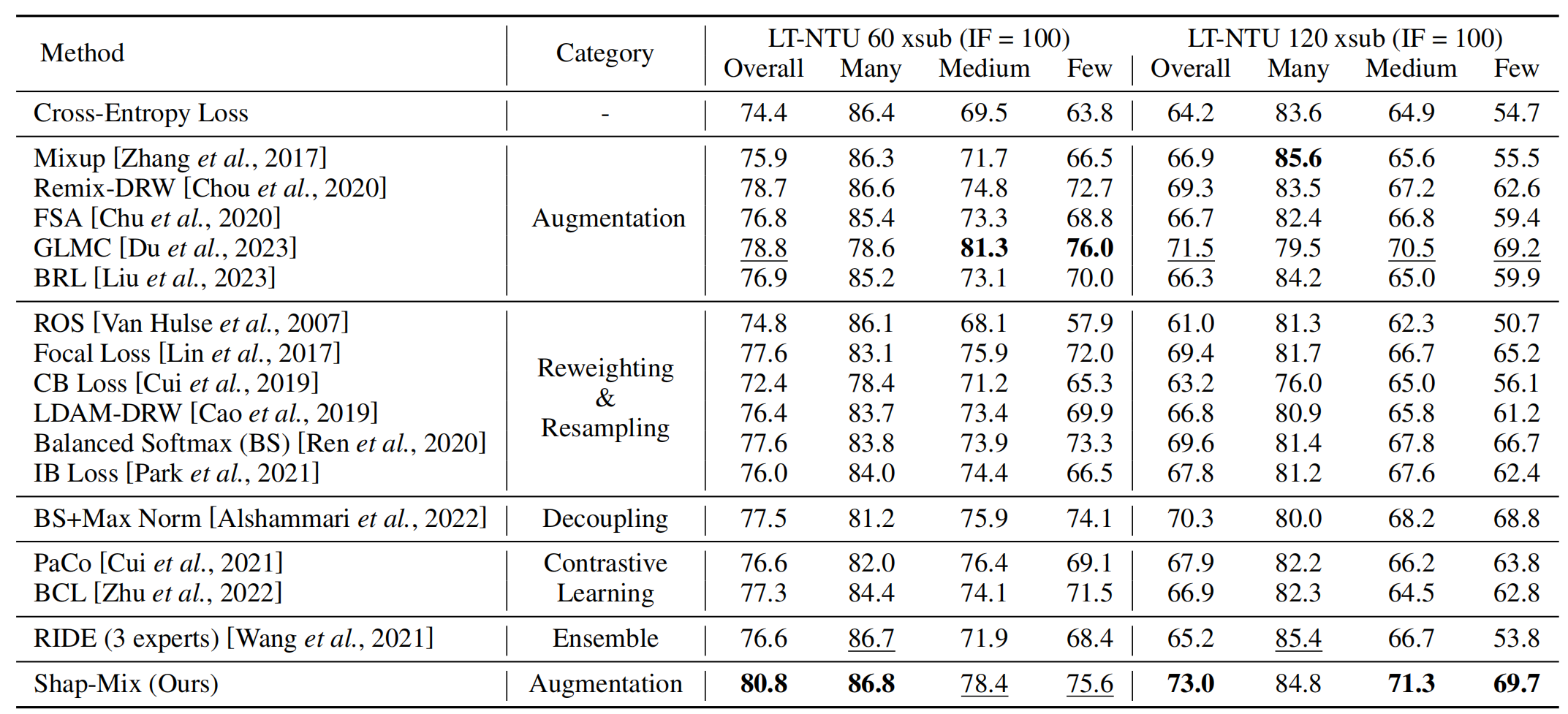

Table 1. Performance comparison of long-tailed skeleton-based action recognition with a single joint stream. IF is the imbalance factor. Top-1 accuracy (%) is reported. Bold and underlined results indicate the highest and second-highest values, respectively.

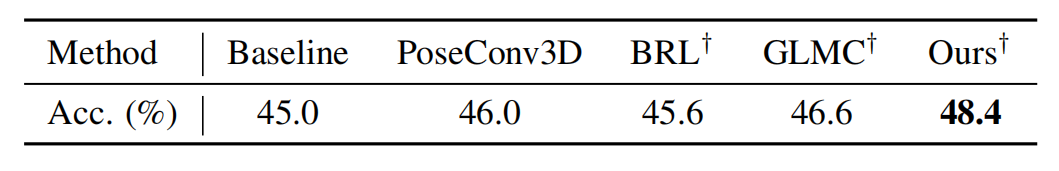

Table 2. Comparison results on Kinetics 400 with a single joint stream.

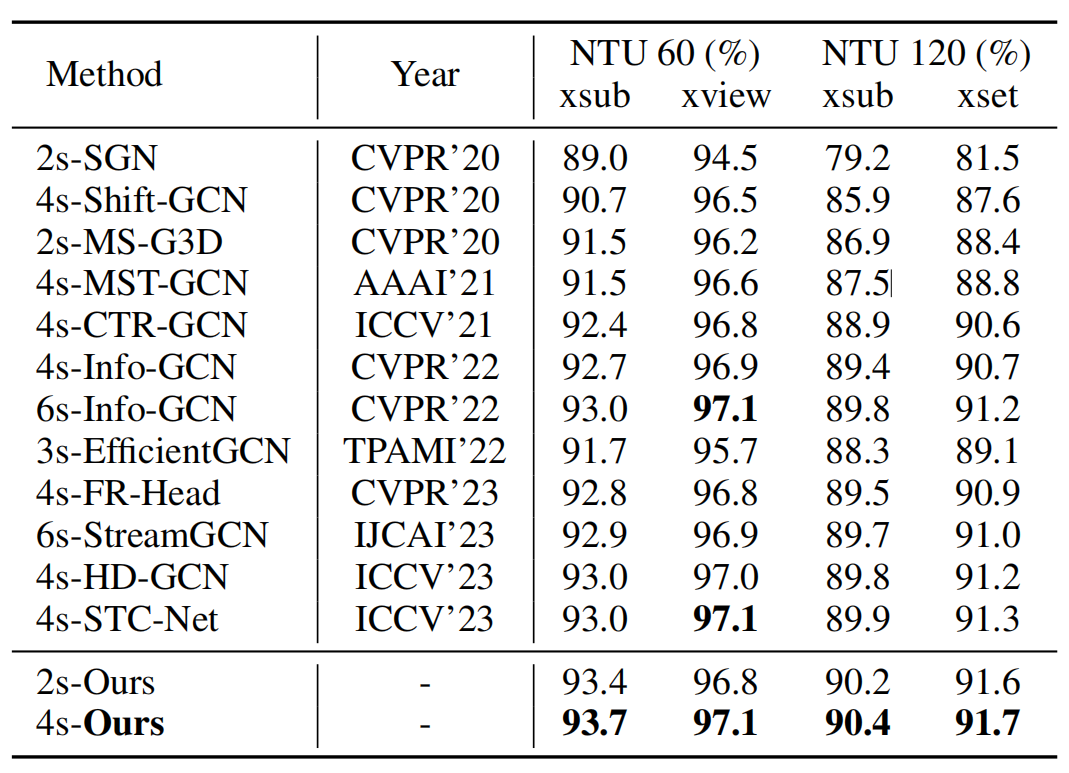

Table 3. Performance comparison of balanced recognition on NTU datasets in top-1 accuracy. "*s" denotes the fusion results of * streams.

Table 3. Visualization results of the Shapley value guided saliency estimation on the LT-NTU 60 dataset. The first, second, and third rows show actions from many-, medium-, and few-shot classes, respectively, where the top 5 most salient parts are given. Note that the Shapley value is normalized and the average saliency is 0.05.

Citation

@InProceedings{Shap_Zhang24,
  author = {Zhang, Jiahang and Lin, Lilang and Liu, Jiaying},
  title = {Shap-Mix: Shapley Value Guided Mixing for Long-Tailed Skeleton Based Action Recognition},
  booktitle = {International Joint Conference on Artificial Intelligence (IJCAI)},
  year = {2024}
}